This AI bug turned 'ace up his sleeve' into ... you don’t want to know

Gmail's translation errors are hilariously bad (and still happening).

Issue 71

On today’s quest:

— Get a reverse outline with Storysnap

— Customize your NotebookLM audio overviews

— The downtime problem

— A new, prominent case of AI psychosis?

— Don’t believe everything ChatGPT tells you

— Gmail translation errors

— More hallucinations in the courts

— AI is an “arrival technology”

Note: I just got access to ChatGPT Agent late last night and hope to have a review for you in a week or so. I’m also hearing rumors that GPT-5 and other major models may launch this week to get ahead of EU regulatory changes, so it could be a big week. We’ll see!

Get a reverse outline with Storysnap

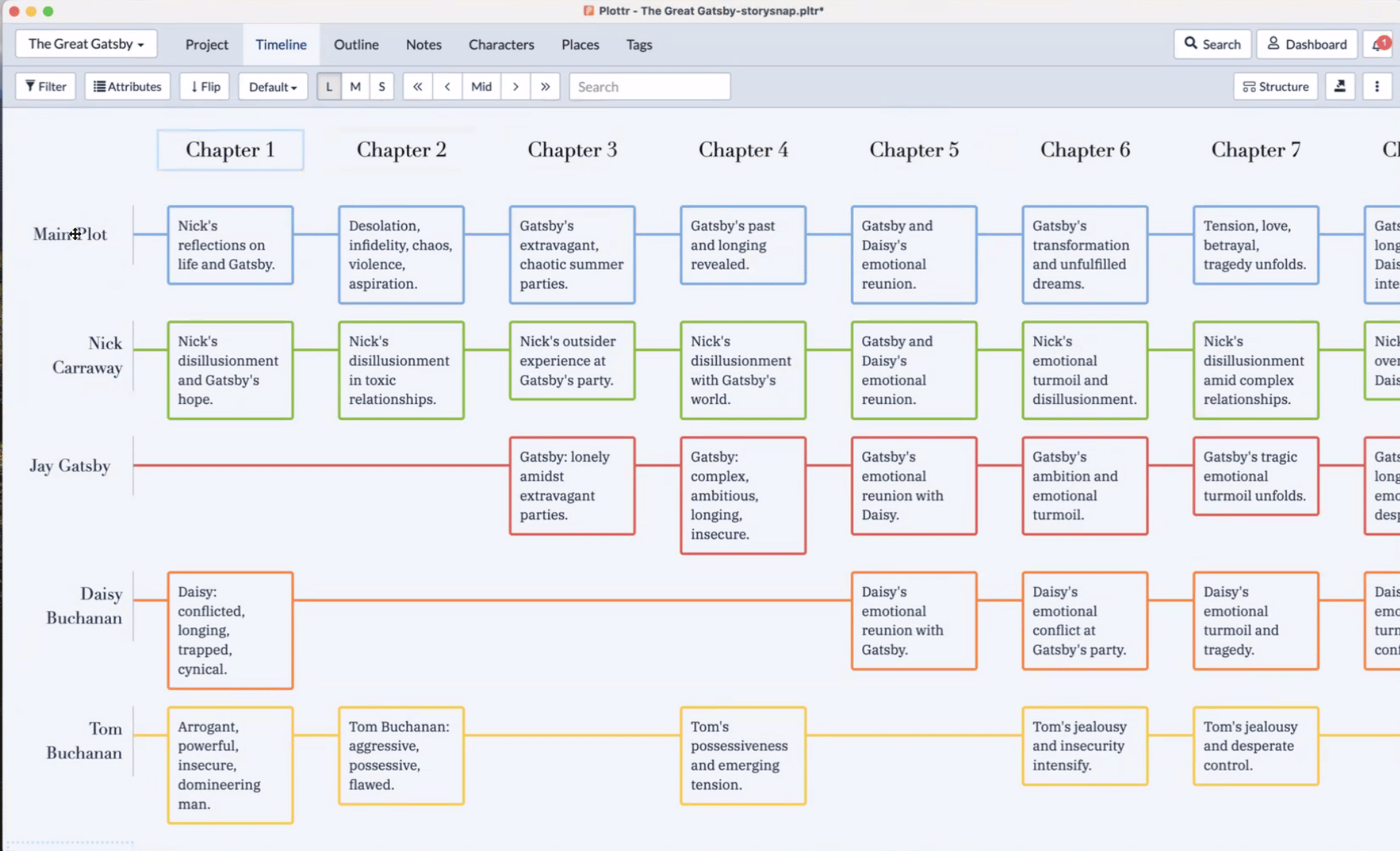

Last week, I attended a webinar hosted by the Editorial Freelancers Association showing how to use an AI tool called Storysnap, which is put out by the same people who make Plottr — a product that helps writers organize and outline books.

Storysnap analyzes a manuscript and automatically creates outlines, detailed character profiles, an overview of all of a story’s settings and timelines, and more. It seems to instantly give you all the info you’d need to make sure your story is well organized and you’ve kept everything straight and consistent. Both Plottr and Storysnap seemed pretty nifty!

The creator is a writer himself and says uploaded material is secure and never used for AI training. The demo was focused on fiction, but he said nonfiction writers also use the tool.

If you’re interested, you can watch a replay of the webinar on the EFA’s YouTube page. The link will take you to the beginning of the demo.

Customize your NotebookLM audio overviews

NotebookLM audio overviews are great when you have information you’d like to review while you’re doing something else — like prepping for a meeting while you’re driving — and they just got better: now you can give the system more direction about what you’d like the conversation to cover:

Craving more depth or want to hear a different angle in your audio overview? Try entering this prompt into the customization box:

"I’m already an expert on X, focus on Y"

The hosts will acknowledge your superior expertise and hone in on the topics you're curious about.

— NotebookLM (@NotebookLM)

2:46 PM • Jul 25, 2025

I wasn’t able to embed it here, but you can also watch the video on Bluesky.

The downtime problem

As AI does more complicated things for you, it works longer before giving answers. For example, in the ChatGPT Agent demo, the system took about 8 minutes to pull together all the requirements for a wedding trip. And a developer I follow recently said Claude Code will work for 10 to 20 minutes doing “fairly sizeable tasks” for him.

This comes on the heels of a widely reported study showing that using the Cursor AI coding tool actually made experienced developers 19% less efficient. And yet, the developers believed they had been 20% more efficient.

Because the study was so surprising, I read it closely, and one thing that jumped out at me was that the developers spent a lot more time doing … nothing. They did spend extra time learning to use the system, writing prompts, and debugging code, but they also spent a lot more time being idle, just waiting for Cursor to do its thing.

One developer quoted in the study reported going to Twitter while waiting on Cursor, and who among us hasn’t popped over to social media for “just a second” and scrolled longer than we intended?

As more people use AI for more complicated tasks, the question of how people use the small chunks of time they spend waiting for output could be one key to whether we actually see rising productivity, or if we all just end up spending more time scrolling through social media.

A new, prominent case of AI psychosis?

I’ve seen so many profiles of people having delusions from using AI that I often can’t keep them straight because they’re so similar: someone starts using AI for work, moves on to discussing philosophy, and then ends up thinking it’s God or it’s sentient and that it’s sending them profound messages (usually revolving around their own importance in the universe).

But there’s a new story of a Silicon Valley venture capitalist (and early investor in OpenAI) who is worrying friends with his seemingly delusional postings on social media.

The posts have been so “out there” that I didn’t understand what they meant until Ryan Broderick of the Garbage Day newsletter (one of my favorites) wrote about it last week, and it’s wild.

It seems as if the investor has been posting things that are very similar to conspiracies from a long-running fan fiction project on the internet about a “secret foundation” that contains paranormal artifacts. (Presumably, he doesn’t realize he is parroting the project.)

I feel bad for anyone having a public meltdown, but it’s going to be especially embarrassing when he (hopefully!) realizes he’s essentially role-playing Mulder from “The X-Files.”

By the way, this AI psychosis thing is rare, but it also seems to be such a serious problem when it happens that I’ve considered adding a warning to every AI Sidequest. For now, keep your wits about you, people! LLMs sound like a person, but they are not sentient. They are, however, sycophantic and will happily play along with any idea you give them.

And if you’re really interested, here’s an even more detailed piece on the venture capitalist case and why LLM delusions happen in general.

Today in ‘don’t believe everything ChatGPT tells you’

I see quite a few stories like this:

A lady DMed the store I worked at, asking for something to be delivered. We replied "no we don't deliver". It went back and forth a couple of times until she said "Well ChatGPT says you do, you should type in your store name and see what it has to say"

— Ryan (@gardenryan.bsky.social)

July 23, 2025

Gmail is making big translation errors in German

The German news outlet t-online says the automated translation in Gmail has been making significant errors, including:

- writing Trump had an “ass up his sleeve” instead of an “ace up his sleeve”
- writing “American position” instead of “Ukrainian position”
- writing “Russian army” instead of “Israeli army”
- writing “There is a lot of ghostly activity these days” instead of “There is a lot of travel these days”
- using informal pronouns to address readers instead of formal pronouns

The problem happens when users have automatic translation turned on in Gmail, and the system misinterprets something and tries to translate text that is already in German. The glitch was first discovered in May 2024 but still seemed to be happening as of a mid-July article on t-online. In June, Google deployed a fix, but it failed to fully address the problem. The company seems to believe the problem will be resolved soon.

(Ironically, since I only speak kindergarten German, I relied on Chrome automatic translation of the t-online article to gather details and confirm the story after reading an overview at Multilingual.)

Rumor mill: Lawyers using AI

This is just an anecdote, but a friend who works in the legal field told me courts are seeing lawyers cite fake, AI-generated cases a lot more often than we’re hearing about in the news, and it’s an especially big problem among people who are trying to represent themselves in court.

AI is an ‘arrival technology’

A new book on AI for librarians (Generative AI and Libraries: Claiming Our Place in the Center of a Shared Future) has an interesting discussion of AI as an “arrival technology,” meaning that instead of being something you choose to adopt, such as a smartphone, it’s something that “fundamentally reshapes society regardless of individual choice or adoption.” Other arrival technologies include electricity and the internet.

The authors say:

AI is fundamentally altering the nature of work, learning, and knowledge creation across society, affecting even those who never directly engage with it. Generative AI is already reshaping the way people learn, discover, and engage with information.

There is effectively no opt-out strategy that is sustainable or effective. We’re not suggesting that there are not particular instances and applications where we must be critical about the implementation of generative AI. In fact, it is one of the primary arguments of this book that librarians and libraries are uniquely suited to help us think through these realities.

The first chapter of the book is free to download, and I found it so interesting I plan to read the whole book even though I’m not a librarian.

It also has an interesting AI disclosure section at the beginning of the chapter if you’re looking for examples to use in your own work.

Learn about AI

Free two-day AI summit

August 13-14, online: Understanding AI: What It Means, Where It’s Going, and How We Shape It

Quick Hits

Legal

Bartz v. Anthropic (the class action copyright case): What are some additional takeaways and where do things go from here? — Authors Alliance

Climate

OpenAI follows Elon Musk’s lead — gas turbines to be deployed at its first Stargate site for additional power — Tom’s Hardware

This is bad

Other

Don’t throw away your dictionary — New York Times

First look at Video Overviews in Google's NotebookLM — TestingCatalog

What if AI made the world’s economic growth explode? — The Economist

AI summaries cause ‘devastating’ drop in audiences, online news media told (Study claims sites previously ranked first can lose 79% of traffic if results appear below Google Overview) — The Guardian

Presidential executive order “Preventing Woke AI in the Federal Government” — The White House (Kevin Roose and Casey Newton discussed this at length in the most recent Hard Fork podcast.)

Is ChatGPT making us stupid? Maybe the tool matters less than the user. — The Conversation

What is AI Sidequest?

Are you interested in the intersection of AI with language, writing, and culture? With maybe a little consumer business thrown in? Then you’re in the right place!

I’m Mignon Fogarty: I’ve been writing about language for almost 20 years and was the chair of media entrepreneurship in the School of Journalism at the University of Nevada, Reno. I became interested in AI back in 2022 when articles about large language models started flooding my Google alerts. AI Sidequest is where I write about stories I find interesting. I hope you find them interesting too.

Written by a human